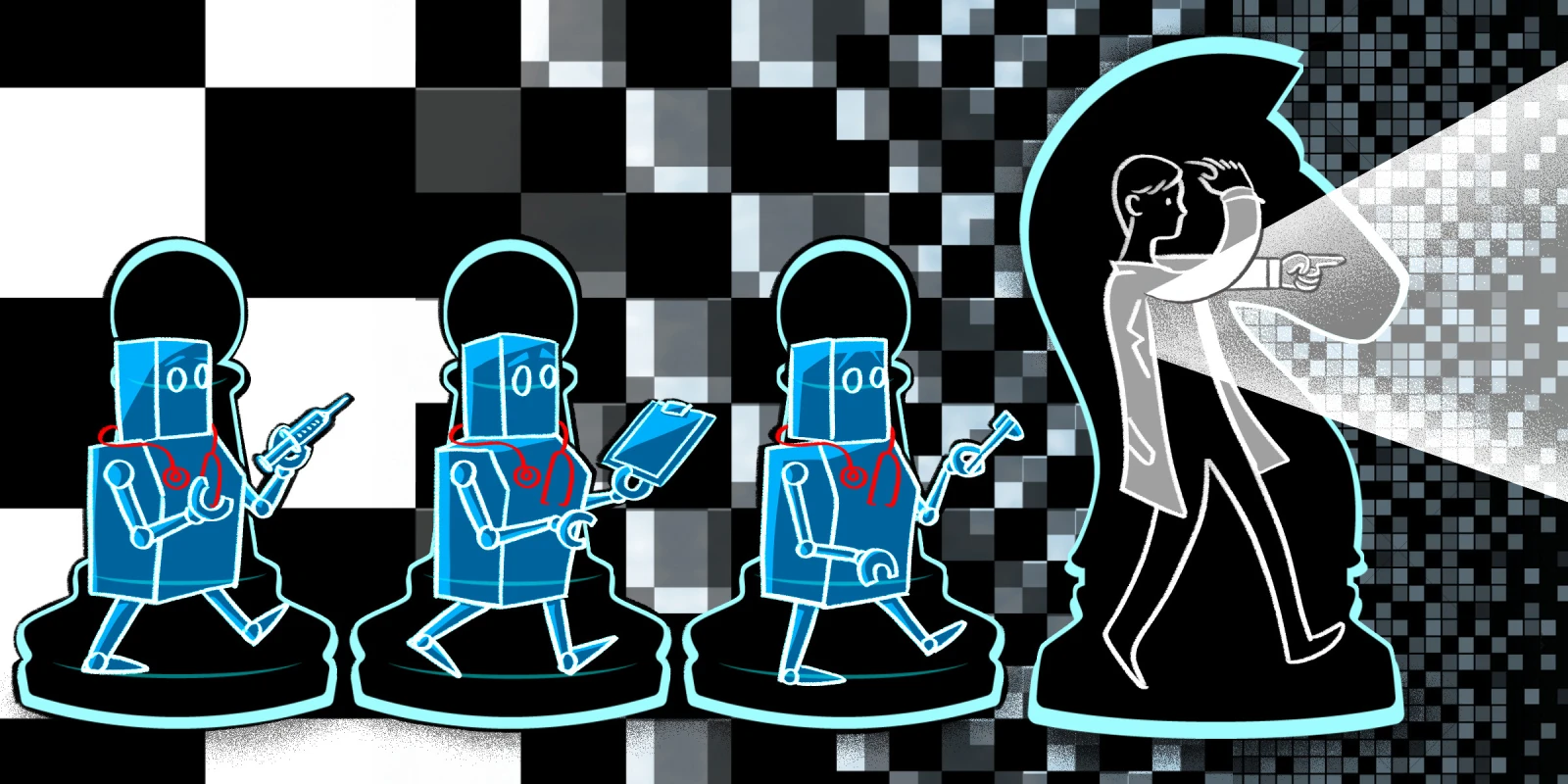

Imagine a future as a physician where your 15-minute visit with a patient is just that: when you leave the room, the note is already written, insurance information is already included, and any additional questions patients have can still be answered without your help. That could be the future of medicine with Chat Generative Pre-trained Transformer (ChatGPT), an application by artificial intelligence (AI) company OpenAI that launched its latest generation in late 2022 and set a record by reaching more than 100 million users in just two months.

I became one of those users myself when I created a free ChatGPT account in advance of my upcoming Objective Structured Clinical Exam (OSCE). I tested ChatGPT’s ability to act as a standardized patient by typing the following instruction into the chatbot’s interface: “Act as a standardized patient that one would see while undergoing OSCE. Provide patient HPI with vital signs and labs.” The AI responded with an organized chief complaint of chest pain, HPI, vitals, and labs including troponins and electrolytes. Then, I asked it to provide a differential diagnosis, whereupon it provided five possible diagnoses and cited two as “less likely” due to “normal chest X-ray.” This simple test suggests the value of ChatGPT as a study aid for trainees. I also tested ChatGPT’s use in the clinical setting: I asked it to define acute kidney injury (AKI) at varying levels of difficulty; to elaborate on the electrolytes that need to be monitored and the symptoms that would occur if they were not; and to write a letter to an insurance company, with references to scientific journals, on why an AKI patient would need time off from work. ChatGPT was able to do all of these things in no time at all.

While completing my tests on the bot, I was impressed not only with the accuracy of the information generated, but also with how I could see answers generated in real time both to initial and to follow-up questions. In health care, there are many gaps where ChatGPT could make a long-lasting, meaningful impact; for example, I can foresee a future in which ChatGPT alleviates the administrative burden on physicians, which amounts to roughly two hours of work for every hour of direct patient care. My hope is that with the aid of ChatGPT, physicians can spend more of their days directly listening to and caring for patients.

Already, the future of medicine is changing at a rapid pace, with innovations that range from Apple Watches that can monitor heart rates to the rising popularity of telehealth visits. AI also plays a role in medicine at the clinical level, not just the administrative and diagnostic levels: currently, it can help segment tumors in the brain or prostate for radiotherapy planning, produce clearer medical images for radiologists, and predict clinical outcomes of cancer patients. However, the fact that AI and machine learning are already being utilized in medicine does not mean we should adopt ChatGPT wholesale. When a technology rises as rapidly as ChatGPT has, one must consider not only the benefits, but also the potential pitfalls.

One concern about ChatGPT is that the medical information it generates may contain falsehoods or inaccuracies, given that these consumer tools were neither trained on biomedical data nor tested by medical experts. In this vein, a study from January 2023 reports that scientists can be fooled by fake, ChatGPT-generated abstracts, which can undermine the credibility of science. Another important concern is that ChatGPT can increase racial bias and heighten existing inequality, given that it tends to have regressive bias: one example of a larger problem is that a “good scientist” is recognized by ChatGPT as a “white male.” Likewise, it is important to consider how ChatGPT can amplify health care inequalities in terms of who has access to these advanced tools. Finally, there is a concern that ChatGPT could take over the administrative roles of health care workers, thereby leaving those workers unemployed, and that it could lead to a loss of trust, either through data hacking or through lack of contact with health care professionals.

Ultimately, as shown by Doximity itself rolling out a beta version of the ChatGPT tool, ChatGPT is here to stay. And there are other AI tools on the horizon, such as Microsoft's AI Bing search engine that calls itself Sydney, any or all of which may carve out a space for themselves in medicine. Personally, I’m excited to see how ChatGPT can alleviate the administrative demands of health care, enhance and expedite clinical care, and prioritize interactions with patients. At the same time, I worry that AI can exacerbate socioeconomic disparities that already exist and spread health care misinformation. Regardless, I believe that medicine would be remiss not to take advantage of AI’s potential. As we enter this new era of health care, it is imperative that both physicians and patients remain cautious and mindful of AI’s limitations.

Have you used, or will you use, ChatGPT in your daily life as a clinician? Share your experiences and reasoning in the comments!

Ellen Zhang is a Harvard Medical Student who is passionate about using writing as a lens to unravel the complexity of medicine, enhance empathy, and provide humanistic care. She enjoys hiking, baking, and exploring local cafes. Ellen is a 2022–2023 Doximity Op-Med Fellow.