“When did your pain start?” I asked. The patient, a petite middle-aged man, did not appear to hear my question. He moaned, clearly uncomfortable. “On a scale of 1 to 10, how bad does it hurt right now?” Again, there was no response. I began to sweat. I was a first-year medical student conducting my first interview, and all I had been told was that this patient’s chief concern was “generalized pain.”

The door opened. “Sorry, had to handle that,” my preceptor said, out of breath. “How’s my student treating you?” There was no response from the patient. I looked toward my preceptor as she surveyed the room. I could see her quickly piecing together the situation as she sighed heavily. “You’ll need to help us help you,” she said to him. “Can you speak to us?” I shifted uncomfortably, hearing my teacher’s voice, her tone clipped, lacking the warmth I was used to. She looked back at me wearily. “Sometimes you just can’t get through. We see this often with certain disorders. But it looks like he’s alright.”

We left the room as the patient continued to moan, alone. My relief at being off the hook was tinged with unease. How did my preceptor know he was fine? Was it expertise or bias? Or both?

This first-year experience has stayed with me throughout my medical education. Thankfully, there have been continued, significant, and meaningful efforts in health care to address unconscious bias and improve patient outcomes. We have begun to measure and probe this murky, often hidden layer of health care in interesting ways, quantifying its impact in the hallways of the ED, in the clinic, and in how we allocate scarce pandemic resources. Still, sample sizes are often small, effects are limited, and causality is nearly impossible to establish.

Yet there is one factor common to all implicit bias problems that we rarely seek to address: humans. At its core, implicit bias is an aspect of social cognition shaped by learned associations and social experiences. It is a deeply human phenomenon, a negative externality of our evolution as social beings and as information condensers. Until recently, the closest we could come to escaping this reality was to entrust decisions to groups, where individual bias is reduced, or to rule-based algorithms, where the levers are in plain sight and implicit biases become explicit.

Today, a third option exists to mitigate implicit bias: artificial intelligence (AI). A clear example comes from a paper published in Nature Medicine in 2021 that explored “unexplained” racial disparities in knee pain among patients with diseases such as osteoarthritis. Notably, these disparities persisted even after controlling for disease severity as graded by physicians using medical imaging. In the study, the researchers used deep learning, a form of AI, to read the medical images and evaluate severity instead of entrusting the task to humans. Suddenly, the AI model’s severity measure explained 43% of the racial disparity in pain, nearly five times the 9% accounted for by radiologists’ standard severity grades. The authors conclude that “Because algorithmic severity measures better capture underserved patients’ pain, [...] algorithmic predictions could potentially redress disparities in access to treatments like arthroplasty.”

When I first read this paper, and the many other emerging AI applications like it, I was reminded of my attempted interview with the pain patient. AI holds the promise of better decision-making for those gray areas where understanding is limited, where our expertise is inextricably mixed with bias, where those who are racial minorities or otherwise underserved in medicine suffer the most.

In health care, we tend to view emerging technologies like AI with a high degree of skepticism. We are risk-averse for good reason; the decisions we make can radically alter people’s lives. But there is a difference between healthy skepticism and the impossibly high standards of fairness and perfection we demand of AI. Over the past few years, a litany of research and news coverage has attacked the application of AI in medicine, with some calling it an “equity threat,” demanding regulation, and claiming that “generalizable bias” in models is “escalating systemic health inequities.”

These concerns are not without merit. As recently as 2020, an algorithm that used private insurance data to predict patient follow-up was found to inappropriately allocate follow-up care, significantly harming Black patients. Yet even this research is not an indictment of AI. Rather, it underscores the need for more rigorous development and deployment. Today, we frequently encounter models and research that are kept private in an attempt to avoid scrutiny. We also often see a worrying profit incentive in commercial prediction algorithms, one that typically comes at the expense of underserved populations. To rectify this, I believe data used to train health care models should be open access and continuously assessed for fairness. Outcomes should also be monitored vigilantly, with readiness to pull ineffective or harmful algorithms.

Today, AI is getting closer to seeing the world as it really is, rather than through carefully crafted variables, numbers, and insurance data. Advances in natural language processing and multimodal research mean the next generation of AI algorithms can be trained on the same data doctors learn from: what we see and hear. AI models have the potential to learn from these natural datasets in a focused and calculated way, free from the biases humans accumulate in their day-to-day lives outside of medicine.

Our health care system, which was built by humans rather than AI, is both deeply inequitable and inefficient. It is run by millions of individual actors, each with their own implicit (and not always discriminatory) biases: the doctor who went to medical school in the Northeast and so thinks Lyme must be the answer, or the psychiatrist who always prescribes escitalopram first-line because that is what their residency program did. It is impossible to measure all of our biases at the individual level.

At the same time, it can feel chilling to read that an algorithm has a measurable bias: that it, say, underdiagnosed minority patients at a rate of X% because of how it was trained or implemented. But algorithmic bias is not worse than human bias just because we can measure it. In fact, that’s what makes it better, and a true hope for eliminating inequality in medical care. You can ask an algorithm how it would manage 1,000 different patients and adjust its training accordingly. You can find the bias in a dataset and correct it. With AI approaches we can query, test, and poke holes until we are confident that bias has a minimal impact on decisions. You cannot do that with a human. Yes, we must examine and correct the datasets AIs are trained on. But we must weigh them against the biased datasets we humans are trained on our whole lives: our personal interactions, our cultures, and our societal stereotypes.
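To make “asking an algorithm” concrete, here is a minimal Python sketch of one such audit. It rests on simplifying assumptions. Any function that flags disease from a patient’s features stands in for a trained model. Each test patient carries a group label and a confirmed diagnosis. The function name, field names, threshold model, and cohort are all hypothetical and are for illustration only. For each group, it computes the share of truly ill patients the model misses, which is the group’s underdiagnosis rate.

```python
from collections import defaultdict
from typing import Callable, Iterable


def underdiagnosis_rates(
    model: Callable[[dict], bool],
    patients: Iterable[dict],
) -> dict[str, float]:
    """Per group, the fraction of truly ill patients the model fails to flag."""
    ill = defaultdict(int)     # truly ill patients seen, per group
    missed = defaultdict(int)  # of those, how many the model did not flag
    for p in patients:
        if p["has_disease"]:
            ill[p["group"]] += 1
            if not model(p["features"]):
                missed[p["group"]] += 1
    return {group: missed[group] / ill[group] for group in ill}


# Hypothetical stand-in for a trained model: flag disease when severity >= 5.
def toy_model(features: dict) -> bool:
    return features["severity"] >= 5


# Hypothetical toy cohort; a real audit would use a large held-out dataset.
cohort = [
    {"group": "A", "features": {"severity": 7}, "has_disease": True},
    {"group": "A", "features": {"severity": 4}, "has_disease": True},
    {"group": "B", "features": {"severity": 3}, "has_disease": True},
    {"group": "B", "features": {"severity": 2}, "has_disease": True},
    {"group": "B", "features": {"severity": 1}, "has_disease": False},
]

rates = underdiagnosis_rates(toy_model, cohort)
print(rates)  # {'A': 0.5, 'B': 1.0}
```

The same query can be rerun after every retraining on thousands of patients. A gap between groups is then a number to track and correct rather than a hunch.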

It is critical to identify and rectify issues of bias in AI systems. Principled research, open datasets, and replication are key to mitigating risks. No technology is a panacea, and even well-intended algorithms could compound injustices if implemented recklessly. But neither are humans immune to prejudice. AI, when implemented critically, with iteration and nurturing, is a solution for health care equity, not a threat. The risks are real, but the promise of AI for health care equality may finally be within reach. Let’s not let the perfect get in the way of the great.

Do you utilize AI in your practice? Share your experience in the comments!

Aditya Jain is a third-year medical student at Harvard Medical School. His previous works include "The Future is STEM" and medical fiction shorts for In Vivo Magazine. He is a published researcher on the applications of artificial intelligence in medicine. When he's not busy with rotations, he enjoys playing guitar, reading sci-fi, and nature hiking. He tweets @adityajain_42. Aditya is a 2023–2024 Doximity Op-Med Fellow.

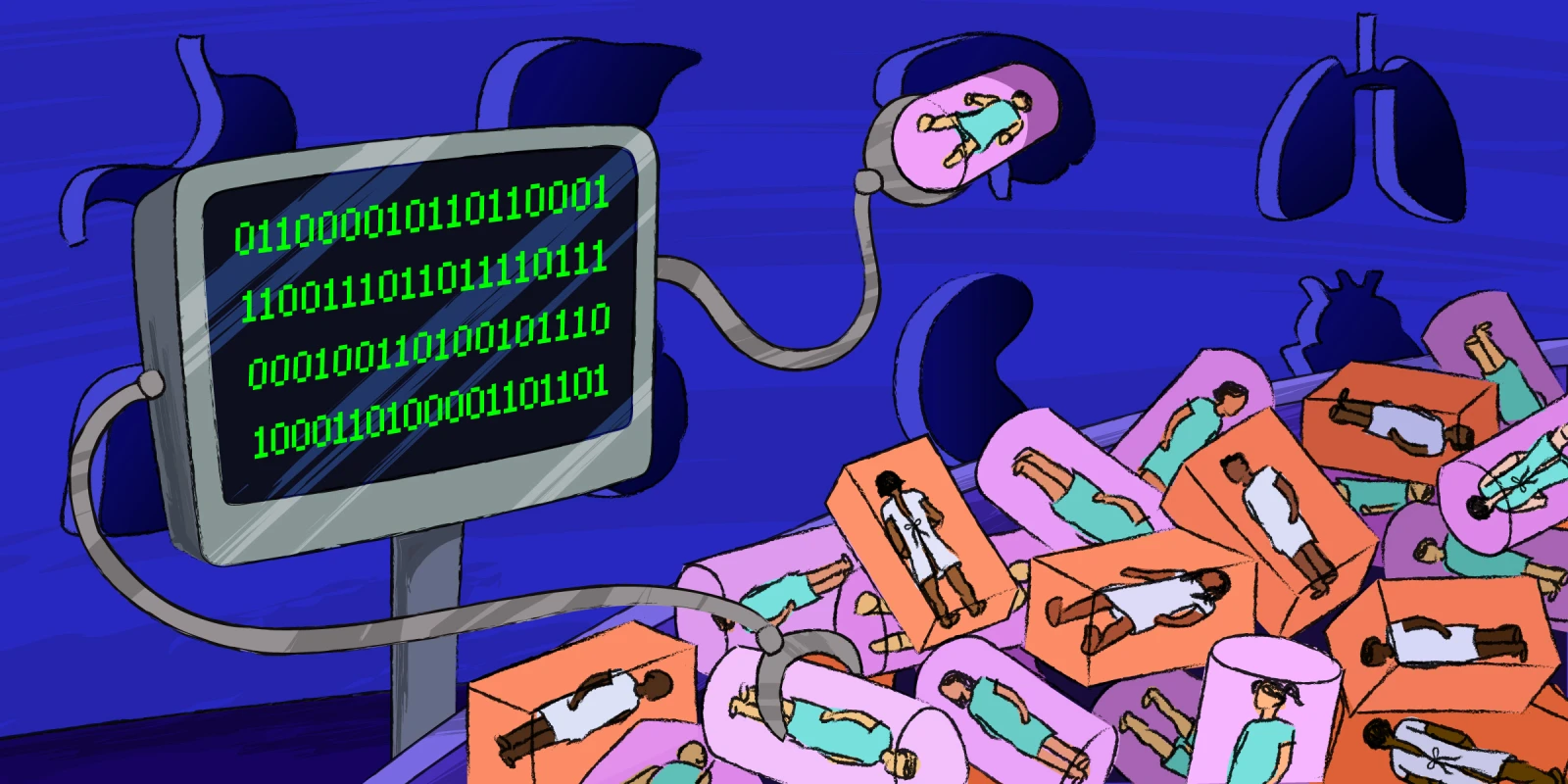

Illustration by Diana Connolly