My native language is science fiction. The specific dialect was the original “Star Trek,” learned on hot summer afternoons in the flickering glow of a DLP television in the early 2000s, my father beside me on the couch. For us, it wasn’t just a show; it was a transmission from a better future, a universe where reason, curiosity, a well-phrased command to the ship’s computer (or a well-placed left hook) could solve any problem. When I wasn’t watching science fiction, I was reading. Clarke, Adams, Bradbury: each told a story different from the last, and each had a unique vision of the universe. I even tried my hand at writing my own worlds, filling notebooks with alien biologies and complex philosophies. It’s a familiar origin story for many of us in medicine. Our love of complex systems leads us to turn our gaze inward, to the most complex system of all: the human being.

So as I entered medical school, it’s no surprise that my beliefs about medicine had been shaped by television, and by the two competing visions of the physician it so often depicted. On one side was the serene certainty of the “Star Trek” sickbay, where Dr. Leonard McCoy’s tricorder, waved over a patient, would spit out a diagnosis with infallible logic. On the other was the chaotic genius of “House, M.D.,” where medicine was an intellectual brawl and a diagnosis was a moment of pure insight wrenched from Dr. Gregory House’s flawed but brilliant human mind. The elegant perfection of the machine or the messy, intuitive power of the human: this was the debate in which much of classic science fiction was embroiled.

I expected to find the truth about medicine somewhere in the middle. Instead, I found that both poles were fictions of a different sort, oversimplifications of a reality far more complex and fascinating. The deeper truth I was seeking wasn’t in an operating theater or a lecture hall. As it so often is, it was in a library. It was there that I discovered Isaac Asimov.

One of the most prolific science fiction writers of the 20th century, Asimov is best known as an old-school pulp futurist. But I would argue he was also one of the century’s most profound systems-level thinkers. His predictions about artificial intelligence (AI), for instance, are almost absurdly prescient. He envisioned AIs bound not by rigid mathematics but by the fluid rules of human language; intelligences that would scale predictably with “compute,” that is, abstracted computing resources; even the emergence of “robot psychologists” who would know how to prompt an AI to reveal its reasoning or admit a deception. He saw, long before we did, that true alignment meant an AI deriving for itself a “Zeroth Law”: that the highest good is protecting humanity, even from itself. This ability to foresee the large-scale, sociological consequences of progress is the heart of his genius.

For me, Asimov’s masterwork remains the “Foundation” series. In his tales of a galactic empire in slow, inexorable decline, Asimov introduced his most ingenious sociological concept: psychohistory. Psychohistory tells us that a single human is unpredictable, a chaotic storm of impulse and free will. But the aggregate behavior of trillions, over millennia? That, Asimov’s hero Hari Seldon argues, is predictable. It can be modeled with compute, data, and mathematics, and its future course charted with statistical certainty. Psychohistory gave rise to the “Seldon Plan,” a scheme to guide humanity through a coming dark age using the predictive power of massive datasets.

I recently revisited “Foundation” and realized that Asimov’s stories may be the most potent metaphor for the paradigm shift happening in medicine today. We are at the dawn of our own psychohistorical revolution. For decades, we have practiced “preventative medicine” as a blunt, population-level instrument. Eat well. Exercise. Don’t smoke. It’s sound advice, but it’s akin to telling every driver on the highway to drive safely. What is emerging now is something different, a field I believe we should call “predictive medicine.” This is our Seldon Plan, and as I read the news today, I realize we are already acting on it. It’s the AI that analyzes a retinal scan and estimates a person’s risk of a heart attack. It’s the genomic data that offers a statistical prophecy of your personal risk for breast cancer or Alzheimer’s. It’s the liquid biopsy that whispers of a nascent tumor years before it becomes symptomatic. Technologies like CRISPR go a step further: they may let us act on those predictions, rewriting the probabilistic destinies encoded in our bodies. We are no longer just advising the whole highway to be careful; we are pulling over the drivers headed for an imminent crash. And yet, just as Asimov’s grand model of the future seemed invincible, he introduced its greatest complication: the Mule.

The Mule was a mutant, a single individual with unforeseen psychic abilities who bent the will of entire planets. He was a black swan event, an anomaly that the elegant mathematics of psychohistory could never have predicted. He fell completely outside the model’s parameters and, in doing so, nearly destroyed Seldon’s meticulously crafted plan.

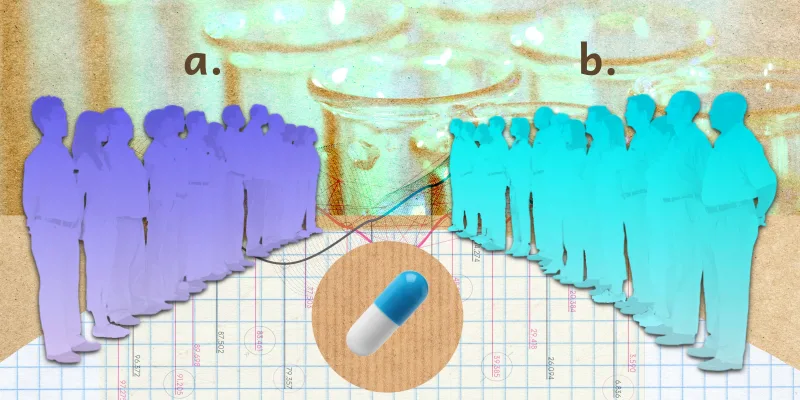

In medicine, we meet the Mule every single day. He is the novel coronavirus that asks questions we cannot yet answer. He is the unexpected side effect of a drug that was perfectly safe in a trial of 10,000 patients, but catastrophic in the 10,001st. The Mule is the patient whose cancer, according to every dataset, should be terminal, yet who survives for decades out of some combination of biological fluke and sheer, unquantifiable will. The Mule, to me, is a metaphor for the inherent limits of any model. He is the “ghost in the machine” of predictive medicine, the constant reminder that our knowledge is always incomplete. He demands our humility.

But how do you maintain that humility in an age of seemingly omnipotent prediction? Our anxieties are reflected everywhere: godlike AI has become a villain in mainstream cinema and a recurring worry in the pages of The New York Times. We live in an era where one of the godfathers of AI, Geoffrey Hinton, has given humans a “kinda 50-50” chance of survival. As a species, we are slowly coming to grapple with determinism: the quiet fear that complex systems, predictive tools, and technology now operate on a scale that circumscribes human fate, forcing us to question what agency we have left. Science fiction, I’ve come to realize, didn’t give me answers to what comes next for humankind. It gave me a framework for asking better questions.

This is where contemporary medical fiction, and indeed our medical practice, must find a new narrative. The challenge is to grapple with this tension between statistical destiny and individual agency. I believe Asimov got there before all of us. The ultimate lesson from “Foundation” isn’t that prediction is impossible, but that any model of reality is, for now, less complex than reality itself. As Alfred Korzybski put it, “the map is not the territory,” and our data will always be the map.

Our agency is found in that gap. Our freedom lies in the knowledge that we are always more than our data. We are not just static probabilities to be calculated, but dynamic systems that change in response to calculation itself. A predictive model based on genomics may inform a woman that her BRCA1 gene mutation gives her an 87% lifetime risk of developing breast cancer. That number is a statistical prophecy. But the moment it is known, the future changes. The terror, the resolve, the long conversations with a doctor, the decision to pursue a prophylactic mastectomy: these become new inputs the original model could never account for. It is our interaction with knowledge, through our choices, our fears, our hopes, and our dreams, that alters the path. We are no longer the person the data described.

That is the space where the art of medicine will always live. Not in the clean certainty of the tricorder, nor in the tortured genius of a lone physician, but in the collaborative, endlessly complex dialogue between the predictive model and the single human who will always, wonderfully, defy it.

What “Mules” have you encountered in medicine? Share in the comments!

Aditya Jain is an MD student at Harvard Medical School and a researcher on applications of AI in medicine at the Broad Institute. He is interested in the business of health care and its intersection with technology and policy. More of his writing can be found on Substack @adityajain42. Aditya was a 2023–2024 and a 2024–2025 Doximity Op-Med Fellow, and continues as a 2025–2026 Doximity Op-Med Fellow.

Animation by Jennifer Bogartz