Brain-computer interface technology for spinal cord injury could finally move us forward after thousands of years of stagnation.

Since the early days of medicine, the classic approach to spinal cord injuries (SCI) has been quite pessimistic. And for good reason. Complete spinal cord injuries—those that occur with total loss of sensory and motor function below the level of the injury—are considered particularly hopeless.

Even if you aren't a superfan of neuroscience or the central nervous system (we'll forgive you, this time), there is a broader message:

Patients with spinal cord dysfunction typically do not die directly from the injury. One reason is that SCI is rarely isolated. Brain injuries, and trauma to other organs or vital vasculature, are more the rule than the exception. Patients who survive the initial insult may still succumb to the sequelae. Respiratory failure, skin breakdown, blood clots, pneumonia, renal failure and pyelonephritis from neurogenic bladder, and a host of other deadly problems are always lurking around the corner.

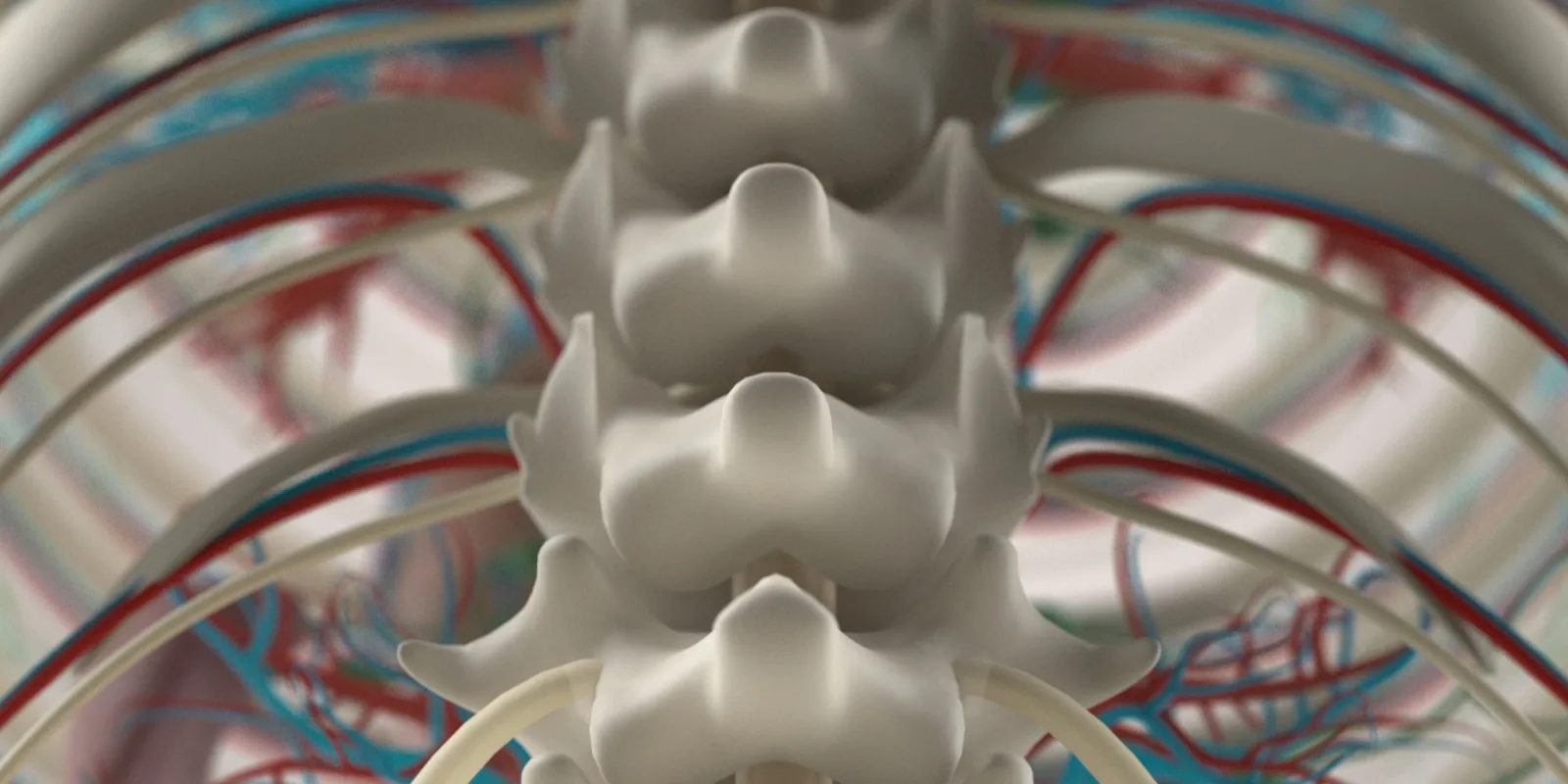

Neurologic injuries evolve over time, especially in the hyperacute and acute phases. Like severe traumatic brain injuries, the acutely injured spinal cord is prone to swelling. Swelling, which heightens damage to the spinal cord by affecting the very structures meant to protect it, often requires immediate intervention. But even when modern medicine is first on the scene, there is no guarantee of a good outcome.

Even in ancient times, thought leaders recommended reduction and decompression of the swollen cord with a laminectomy if some motor or sensory function was spared (though how they confirmed the correct operative levels without fluoroscopy is baffling).

However, even in these 'dark' times, people easily recognized the mortal danger of a complete SCI. With modern intensive care units and advances in medical science, SCI is less often fatal today, but recovery of function remains a pipe dream for most.

From Hippocrates' time to today, the prognosis hasn't changed all that much. Indeed, it's easy to see why the medical community is so nihilistic about spinal cord injury.

Consider, for example, those patients who do not recover their ability to walk, use their arms, or breathe on their own again. Or those who make minimal functional recovery before plateauing. What about patients with complete cord transections, where even a mechanical anastomosis of the cord is a hopeless task?

But more and more, as we look to "new" technology to solve so many of our old problems, research suggests the situation may be less hopeless than we believe. A recent study published in the New England Journal of Medicine is one example. Four patients with chronic, motor-complete spinal cord injuries regained the ability to stand independently after intervention. The benefit was seen with an intensive, 15-week rehabilitation program combined with simultaneous epidural spinal cord stimulation. (Note, however, that the four patients in the study had injuries that were technically incomplete because at least some sensation was spared.)

But brain-computer interfaces are not just another new technology for an old problem. They may sound like something from a science fiction novel (one you should totally buy and read, by the way), but brain-computer interfaces are emerging as a possible solution. In fact, it is a solution that has been "emerging" since 1973, when Jacques Vidal first wrote about direct brain-computer communication.

After all, what is the spinal cord besides a conduit for transmitting electrical signals from the brain to the arms, legs, and organs? What if you could bypass the damaged area and send the signals to a robotic arm that would never lose another arm wrestling match?

The process works like this: instead of sending signals down the usual neuromuscular pathways (the spinal cord's job), a brain-computer interface might receive those same signals coming up from the brain. The signal processing starts with a small "chip" implanted into the cerebral cortex. This component has several short probes that project into the cortex to sit among the neurons. The probes receive signals from nearby neurons when they emit impulses, and the chip then transmits those impulses to an amplifier. The amplifier is (currently) a tremendously unwieldy extracranial mass that sends these impulses into a computer. Finally, the computer analyzes the signals and translates them into commands fulfilled by a digital or mechanical output mechanism.

Essentially, this means patients can use their thoughts to control a cursor on a screen, use the internet, communicate, play videogames, and do many other things previously thought impossible.
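For readers who think in code, the chain described above (probes, amplifier, computer, output) can be sketched in a few lines of Python. This is a toy illustration only, not how any real system is built: the function names, the gain and threshold values, and the simple linear decoder are all assumptions made for the example, and real decoders are calibrated per patient and are far more sophisticated.

```python
from typing import List, Sequence, Tuple


def amplify(samples: Sequence[float], gain: float) -> List[float]:
    """Scale the tiny voltages recorded by one probe (the amplifier's job)."""
    return [gain * s for s in samples]


def count_spikes(samples: Sequence[float], threshold: float) -> int:
    """Count upward threshold crossings, a crude stand-in for spike detection."""
    spikes = 0
    for previous, current in zip(samples, samples[1:]):
        if previous < threshold <= current:
            spikes += 1
    return spikes


def decode_velocity(
    spike_counts: Sequence[int],
    weights: Sequence[Tuple[float, float]],
    baseline: Sequence[float],
) -> Tuple[float, float]:
    """Hypothetical linear decoder: each probe nudges the cursor along its own (x, y) weight."""
    vx = sum((c - b) * w[0] for c, b, w in zip(spike_counts, baseline, weights))
    vy = sum((c - b) * w[1] for c, b, w in zip(spike_counts, baseline, weights))
    return vx, vy


def move_cursor(
    position: Tuple[float, float], velocity: Tuple[float, float], dt: float
) -> Tuple[float, float]:
    """Output stage: advance the on-screen cursor by velocity * dt."""
    return position[0] + velocity[0] * dt, position[1] + velocity[1] * dt


def run_pipeline(
    recordings: Sequence[Sequence[float]],
    weights: Sequence[Tuple[float, float]],
    baseline: Sequence[float],
    start: Tuple[float, float] = (0.0, 0.0),
    gain: float = 1000.0,       # assumed value, for illustration only
    threshold: float = 50.0,    # assumed value, for illustration only
    dt: float = 0.5,
) -> Tuple[float, float]:
    """Probes -> amplifier -> spike counts -> decoded velocity -> new cursor position."""
    amplified = [amplify(channel, gain) for channel in recordings]
    counts = [count_spikes(channel, threshold) for channel in amplified]
    velocity = decode_velocity(counts, weights, baseline)
    return move_cursor(start, velocity, dt)


# Two simulated probes: the first fires twice, the second stays silent.
recordings = [[0.0, 0.1, 0.0, 0.1, 0.0], [0.0, 0.0, 0.0, 0.0, 0.0]]
weights = [(1.0, 0.0), (0.0, 1.0)]  # probe 1 drives x, probe 2 drives y
baseline = [0.0, 0.0]
print(run_pipeline(recordings, weights, baseline))
```

In this made-up example, only the first probe "fires," so the cursor moves along the x-axis and stays put on the y-axis. The point is the shape of the pipeline, not the numbers.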

Brain-computer interface technology for spinal cord injury, if shown to be safe and effective, may be a game-changer. It may also have applications in treatments for other neurological conditions like amyotrophic lateral sclerosis, stroke, or cerebral palsy.

So why isn't it standard of care for all patients with SCI?

First, cool YouTube videos always forget to mention the side effects and drawbacks accompanying such exciting advances. Fortunately, in this case, the adverse events seem to be rare. However, the technology itself has at least three limitations.

- First, studies still need to demonstrate safety and efficacy in the intended population.

- Second, these systems may currently be too burdensome for patients to use on a regular basis (i.e., a large amplifier poking out of your head is not particularly comfortable).

- Third, the technology may not currently provide a perfect user experience. Imagine your frustration when the computer cursor lags. Now imagine your brain-computer interface is controlling a motor vehicle: your first technical failure might be your last.

That's not to say the future isn't promising in this area. The Defense Advanced Research Projects Agency (DARPA) recently funded six major brain-computer interface projects, one of which was founded by Elon Musk and another of which is being run by Facebook's R&D team. These systems may have a relatively long road to travel before they are mainstream, but it might not be as long a road as you think.

If you were ever afraid of the government and big corporations putting "chips" in your brain, now is the time to bust out your tinfoil hat.

Ultimately, brain-computer interface technology is important for more than one reason. Yes, it's interesting, but it also speaks to something more. In medicine, it is easy to fall into a routine—we often do our day jobs and treat patients the same way we did five, ten, or twenty years ago.

We can all use a reminder to learn something new from time to time. We can make an effort to remember to stay abreast of upcoming developments in a given specialty's pipeline. We can all do our best to be ready when those developments arrive. And above all, we can all keep pushing forward to improve patient care and avoid becoming hopeless ourselves.

This article originally appeared on Modern MedEd.

Jordan G. Roberts, PA-C is a neurosurgical PA and part-time medical writer who creates awesome medical education for all clinicians.

Disclosures

Jordan G. Roberts is an owner and co-founder of Modern MedEd. Some links in the article may lead to Modern MedEd's affiliate partners. Making a purchase through these links does not increase the price readers pay, but may generate a commission. Please do not make any purchase unless you feel it will truly benefit you.